OVB Network Diagram and Update

Status

It's been a while since my last OVB update, and I've been meaning to post a network diagram for quite a while now, so I figured I would combine the two.

In general, the news is good. Not only have I continued to use OVB for my primary TripleO development environment, but I also know of at least a couple of other people who have done successful deployments with OVB. There is also some serious planning underway for switching the TripleO CI cloud over to OVB to make it more flexible and maintainable.

OVB is also officially not a one-man show anymore. Steve Baker has been contributing for a while, and he has made some significant changes lately, including the ability to use a single BMC instance to manage a large number of baremetal instances. This helps OVB make even better use of the available hardware. He also deserves credit for many of the improvements to the documentation, which now exists and can be found in the GitHub repo.
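To make the one-BMC-to-many-nodes idea a little more concrete, here is a minimal Python sketch. It is not OVB's actual code, and it skips the IPMI wire protocol entirely. `FakeCloud` is a hypothetical in-memory stand-in for the host cloud's compute API, and `BmcService`, `BmcHost` and the example addresses are illustrative names only. Each simulated service owns exactly one baremetal instance and turns power requests (modeled loosely on ipmitool's `power status`, `power on` and `power off` subcommands) into cloud calls. The single BMC host simply routes each request to the service keyed by the address it arrived on.

```python
from dataclasses import dataclass, field


class FakeCloud:
    """Hypothetical stand-in for the host cloud's compute API (in memory only)."""

    def __init__(self) -> None:
        self.power: dict[str, bool] = {}

    def add_instance(self, instance_id: str) -> None:
        self.power[instance_id] = False  # new instances start powered off

    def start(self, instance_id: str) -> None:
        self.power[instance_id] = True

    def stop(self, instance_id: str) -> None:
        self.power[instance_id] = False

    def is_running(self, instance_id: str) -> bool:
        return self.power[instance_id]


@dataclass
class BmcService:
    """One simulated BMC: translates power requests for a single instance."""
    cloud: FakeCloud
    instance_id: str

    def handle(self, command: str) -> str:
        if command == "power status":
            return "on" if self.cloud.is_running(self.instance_id) else "off"
        if command == "power on":
            self.cloud.start(self.instance_id)
            return "ok"
        if command == "power off":
            self.cloud.stop(self.instance_id)
            return "ok"
        raise ValueError(f"unsupported command: {command}")


@dataclass
class BmcHost:
    """A single BMC instance hosting many services, keyed by a listen address."""
    services: dict[str, BmcService] = field(default_factory=dict)

    def add(self, address: str, service: BmcService) -> None:
        self.services[address] = service

    def dispatch(self, address: str, command: str) -> str:
        # Route the request to whichever service owns this address.
        return self.services[address].handle(command)


# Three hypothetical baremetal instances behind one BMC host.
cloud = FakeCloud()
host = BmcHost()
for i in range(3):
    instance_id = f"baremetal-{i}"
    cloud.add_instance(instance_id)
    host.add(f"10.0.1.{10 + i}", BmcService(cloud, instance_id))

print(host.dispatch("10.0.1.11", "power on"))      # ok
print(host.dispatch("10.0.1.11", "power status"))  # on
print(host.dispatch("10.0.1.12", "power status"))  # off
```

The point of the design is that adding another node only costs another entry in the host's table, not another BMC instance.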

At some point I want to move this work into the big tent so we can start using the upstream Gerrit and general infrastructure, but the project likely requires some extra work before that can happen (unit tests would seem like a prerequisite, for example). I (or someone else...subtle hint ;-) also need to sit down and do the work to get all of this working in regular public clouds. Because a few people have asked about this recently, I started an etherpad about the work required to upstream OVB. Feel free to jump on any of the tasks listed there. :-)

Network Diagram

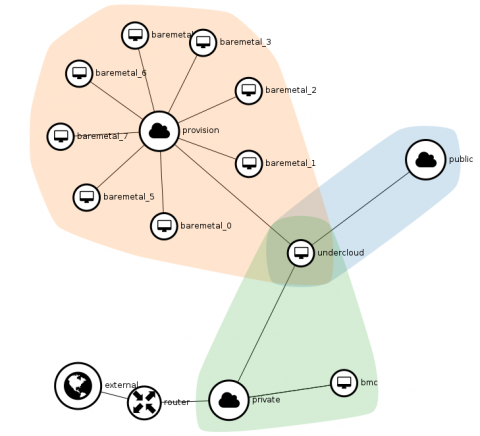

Attached to this post is a network diagram generated with the slick new Network Topology page in Horizon. In it, you can see a few things:

- At the bottom left, we see the not terribly interesting external and private Neutron networks, connected by a Neutron router. This is pretty standard stuff that you would see in almost any OpenStack installation.

- At the bottom right, we see the first OVB-specific item: the bmc. As noted above, this is a single instance that has a number of openstackbmc services running on it. These services respond to IPMI commands and control the baremetal instances in the upper left of the diagram. Note that the bmc does not have to be on the same network as the baremetal instances, because it never talks to them directly. All it needs is a way to talk to the host cloud, which it gets through the external network.

- Around the middle of the diagram we see the undercloud instance. This is just a regular OpenStack instance that can be used to do baremetal-style deployments to the baremetal VMs. In my case it's a TripleO undercloud, but you could use other baremetal deployment tools instead. Note that this instance is on both the private network (so you can access it via a floating IP, and so it has access to the bmc instance) and the provision network (an isolated Neutron network over which the baremetal instances are provisioned). In this diagram it's also attached to a public network. That network isn't used here, but it could also be attached to the baremetal instances to more closely simulate what a real baremetal environment might look like.
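The layout described in the list above can also be written down as plain data and sanity-checked. The following Python sketch is only an illustration: the network and instance names mirror the diagram as described in this post, while `TOPOLOGY`, `shared_networks` and `check_layout` are hypothetical helpers, not part of OVB or Neutron. It assumes the bmc sits on the private network (which is how the undercloud reaches it) and does not model the router to the external network. The check encodes what the post calls out: the undercloud shares the provision network with every baremetal instance and can reach the bmc, while the bmc itself needs no network in common with the nodes it controls.

```python
# Hypothetical model of the topology in the diagram:
# instance name -> set of Neutron networks it is attached to.
# Assumption: the bmc is on the private network; the router to the
# external network (its path to the host cloud) is not modeled here.
TOPOLOGY: dict[str, set[str]] = {
    "bmc": {"private"},
    "undercloud": {"private", "provision", "public"},
    "baremetal-0": {"provision"},
    "baremetal-1": {"provision"},
}


def shared_networks(a: str, b: str) -> set[str]:
    """Return the networks two instances have in common."""
    return TOPOLOGY[a] & TOPOLOGY[b]


def check_layout() -> list[str]:
    """Return a list of problems; an empty list means the layout looks sane."""
    problems = []
    baremetal = [name for name in TOPOLOGY if name.startswith("baremetal")]
    for node in baremetal:
        # The undercloud provisions nodes over the isolated provision network.
        if "provision" not in shared_networks("undercloud", node):
            problems.append(f"{node} is not reachable on the provision network")
    # The undercloud must reach the bmc to send it IPMI commands.
    if not shared_networks("undercloud", "bmc"):
        problems.append("undercloud cannot reach the bmc")
    return problems


print(check_layout())                         # []
print(shared_networks("bmc", "baremetal-0"))  # set()
```

The empty intersection on the last line is the interesting part: the bmc controls the baremetal instances without ever sharing a network with them.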

In any case, I hope this visualization of the network architecture of an OVB deployment helps anyone new to the project who might be having trouble wrapping their head around what's going on. As always, feel free to contact me if you want to discuss anything further.
